
Building a Smarter, More Intuitive Search at Buyam

"When users can't find what they're looking for, the problem isn't always the product—it's often the search experience." —Team Buyam

Search in the Context of African eCommerce

In fast-growing online marketplaces like Buyam, connecting users with the right products quickly is crucial, not just for user satisfaction but also for vendor success. Whether a user types “red sandals for women” or uploads a screenshot of shoes they liked on Instagram, search is the start of their journey. And that journey needs to feel intuitive.

Yet, the challenges are steep:

- Catalogs evolve daily.

- Product images often outnumber clean, well-tagged descriptions.

- User queries can be vague, misspelled, or in multiple languages.

We needed a system that works not just for perfect queries, but for real-world behavior—across images, text, and intent.

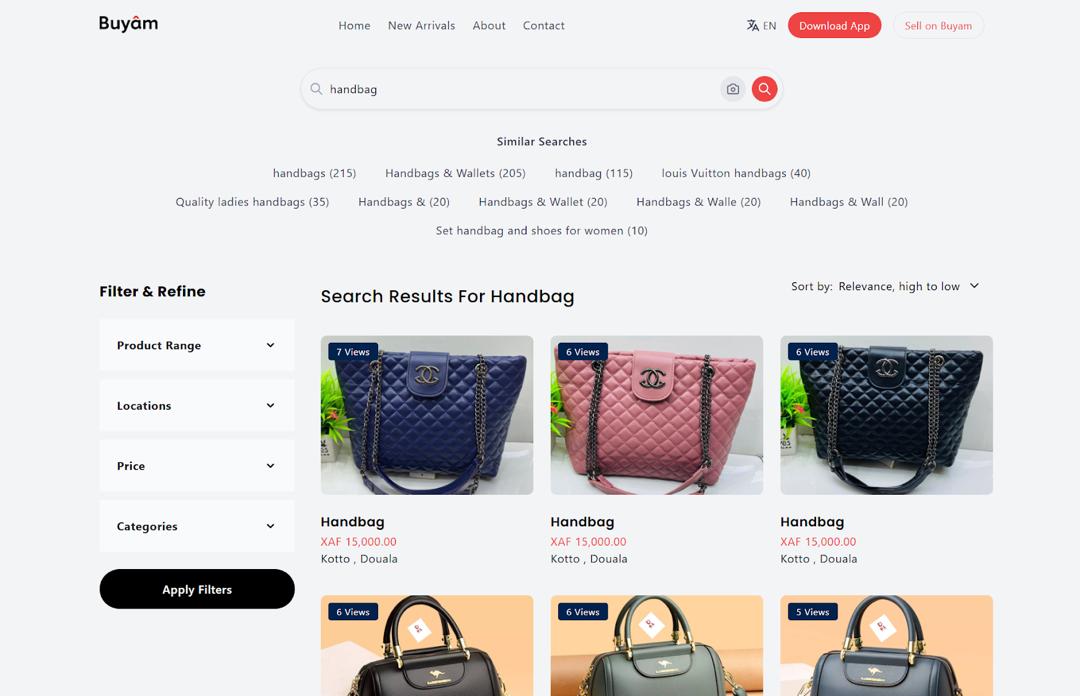

Towards a Unified Search System: Text + Image

We designed a two-track search architecture that supports:

- Traditional text search for fast lookup of product names, features, and vendors.

- Image search for visually led discovery using deep learning and vector embeddings.

The final experience is unified for users, regardless of how they start their search.

Buyam supports both text and image-based search seamlessly.

Text Search: Layering Relevance with Speed

Our text-based search system is built on top of Elasticsearch and tuned for relevance.

Key layers in the stack:

- Field-Level Indexing: We store and analyze fields like product name, description, vendor, and category.

- Query Preprocessing: Synonym expansion, typo-tolerant matching, and n-gram tokenization help vague, misspelled, or partially typed queries still match relevant products.

- Scoring Logic: We incorporate popularity, freshness, and geographic proximity to elevate the most useful results.

This lets someone typing “cheap phone with good camera” still land on a match—even if no product has that exact phrase.
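
To make the layers above concrete, here is a minimal sketch of how such a query could be expressed in Elasticsearch's query DSL, written as a plain Python dictionary. The field names (`name`, `popularity`, `created_at`, `vendor_location`), the boosts, and the decay scales are illustrative assumptions, not Buyam's actual mapping or tuning. Synonym expansion and n-gram tokenization would normally live in the index analyzers and are not shown here.

```python
from typing import Any, Dict


def build_text_query(user_query: str, lat: float, lon: float) -> Dict[str, Any]:
    """Build an Elasticsearch request body for a free-text product search.

    All field names, boosts, and scales below are hypothetical.
    """
    return {
        "query": {
            "function_score": {
                # Text relevance across several fields, with typo tolerance.
                "query": {
                    "multi_match": {
                        "query": user_query,
                        "fields": ["name^3", "category^2", "description", "vendor"],
                        "fuzziness": "AUTO",
                    }
                },
                "functions": [
                    # Popularity, dampened so best-sellers do not swamp relevance.
                    {
                        "field_value_factor": {
                            "field": "popularity",
                            "modifier": "log1p",
                            "missing": 0,
                        }
                    },
                    # Freshness: the score decays as listings get older.
                    {"gauss": {"created_at": {"origin": "now", "scale": "30d", "decay": 0.5}}},
                    # Geographic proximity between the shopper and the vendor.
                    {
                        "gauss": {
                            "vendor_location": {
                                "origin": {"lat": lat, "lon": lon},
                                "scale": "50km",
                                "decay": 0.5,
                            }
                        }
                    },
                ],
                # Add the three signals together, then scale the text score by the sum.
                "score_mode": "sum",
                "boost_mode": "multiply",
            }
        }
    }


body = build_text_query("cheap phone with good camera", lat=4.05, lon=9.70)
```

The resulting `body` could be passed to a search request against a product index. Summing the signals before multiplying keeps a product with zero recorded popularity from being scored out entirely, which is one possible design choice among several.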

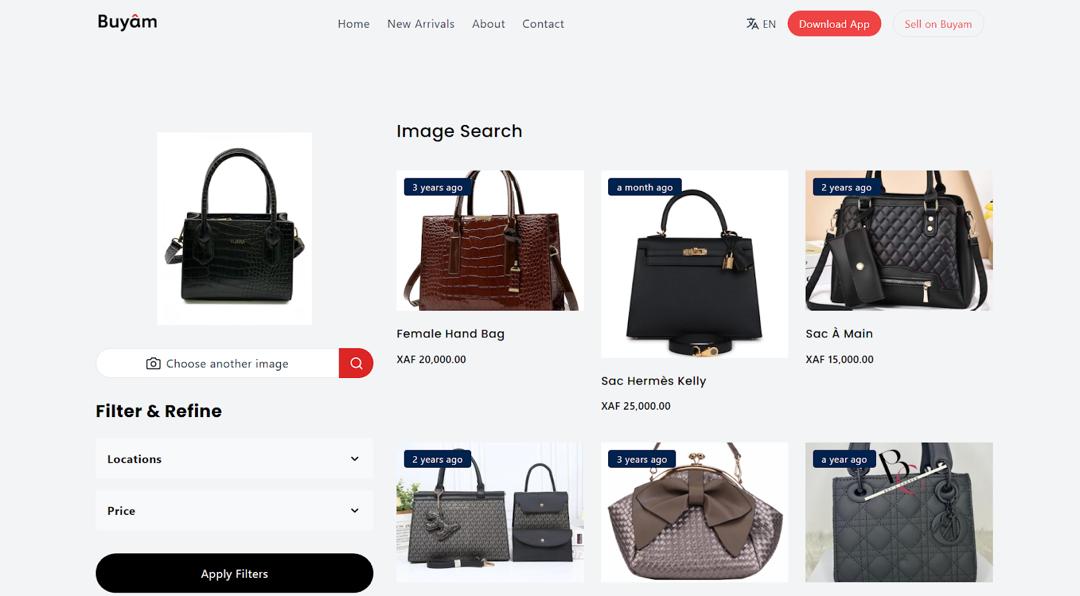

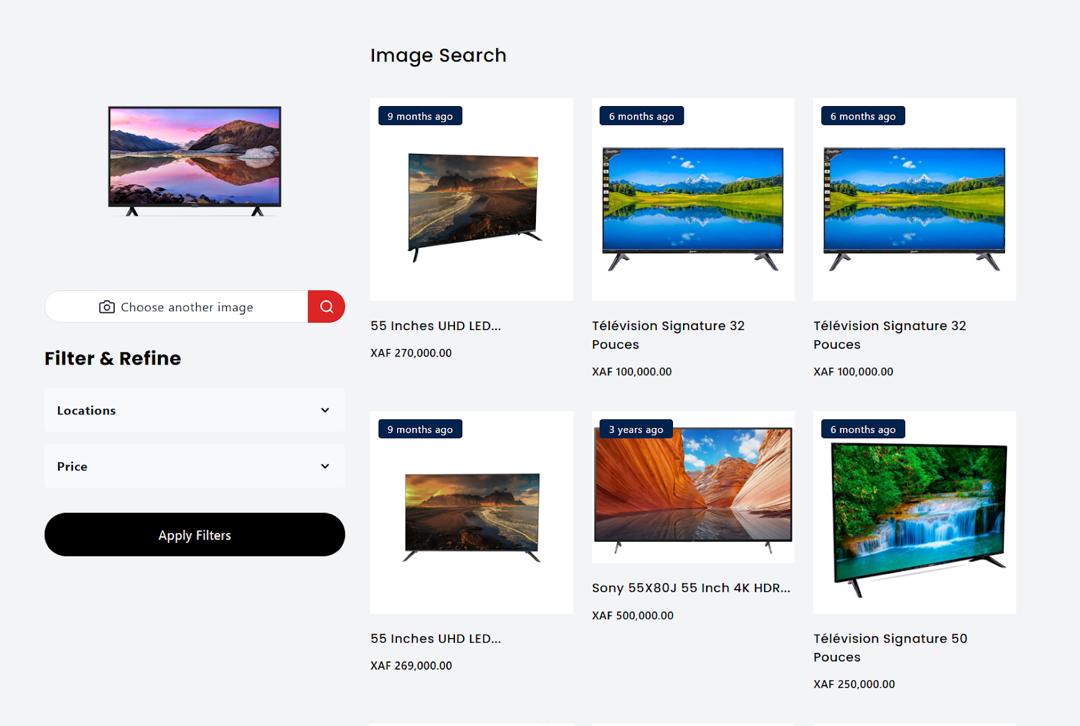

Visual Search: Understanding Images, Not Just Tags

Text works well when users know what to type. But what if they only have a picture?

Buyam’s visual search fills this gap. Users upload an image, anything from a product photo to a fashion screenshot, and get visually similar results from our catalog.

Under the hood:

- We use a lightweight, pre-trained convolutional model to convert product and uploaded images into feature vectors.

- These embeddings are indexed in Elasticsearch using the k-NN plugin.

- A visual similarity score is computed using vector distance (cosine or Euclidean) during retrieval.

This enables semantic image matching: the model doesn’t just compare color or shape, it also captures higher-level visual patterns and context.
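
The retrieval step can be illustrated with a small, self-contained sketch of cosine-similarity ranking over embeddings. This is a brute-force comparison for clarity only; a k-NN index would typically use an approximate nearest-neighbor structure rather than scanning every vector. The three-dimensional vectors and product IDs are made up for the example, and real embeddings from a convolutional model would have hundreds of dimensions.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k_similar(
    query_vec: Sequence[float],
    catalog: Dict[str, Sequence[float]],
    k: int = 3,
) -> List[Tuple[str, float]]:
    """Return the k catalog items most similar to the query, best first."""
    scored = [(pid, cosine_similarity(query_vec, vec)) for pid, vec in catalog.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


# Hypothetical catalog embeddings (toy values, not model output).
catalog = {
    "sandal-red": [0.9, 0.1, 0.0],
    "sandal-brown": [0.7, 0.3, 0.1],
    "phone-case": [0.0, 0.2, 0.9],
}

# Embedding of the uploaded image (also a toy value).
results = top_k_similar([1.0, 0.0, 0.0], catalog, k=2)
print(results)
```

With these toy vectors, both sandals rank ahead of the phone case because their embeddings point in nearly the same direction as the query.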

Real-Time Indexing and Dynamic Scalability

One of our biggest engineering concerns was maintaining real-time searchability in a fast-changing product environment.

- New products are automatically processed—text fields indexed, image embeddings extracted—immediately after they’re added.

- Our Elasticsearch cluster scales dynamically with product volume and query load, keeping performance stable.
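
As a rough sketch of the per-product processing step, the function below assembles a single index document from a new product's text fields and its image embedding. The field names, the `Embedder` type, and `fake_embed` are all hypothetical stand-ins introduced for illustration; in a real pipeline the embedder would wrap the pre-trained model and the resulting document would be sent to the search index.

```python
from typing import Any, Callable, Dict, List

# Any callable that turns raw image bytes into a feature vector.
Embedder = Callable[[bytes], List[float]]


def build_index_document(
    product: Dict[str, Any],
    image_bytes: bytes,
    embed: Embedder,
) -> Dict[str, Any]:
    """Combine text fields and the image embedding into one document."""
    return {
        "name": product["name"].strip(),
        "description": product.get("description", "").strip(),
        "vendor": product["vendor"],
        "category": product["category"],
        "image_vector": embed(image_bytes),
    }


def fake_embed(image_bytes: bytes) -> List[float]:
    # Placeholder only: NOT a meaningful embedding, just a deterministic stub.
    return [len(image_bytes) / 10.0, 0.0, 1.0]


doc = build_index_document(
    {"name": " Red sandals ", "vendor": "Shop A", "category": "shoes"},
    image_bytes=b"12345",
    embed=fake_embed,
)
```

Passing the embedder in as a parameter keeps the document-building logic testable without loading a model.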

Monitoring and Feedback Loops

Search systems aren’t static. We continuously refine ranking logic using behavioral signals:

- What products get clicked after a search?

- What leads to add-to-cart or purchase?

- Where do users bounce?

These metrics tell us which ranking signals actually lead to engagement. For instance, if a visually similar product doesn’t convert, we reassess its weight in the ranking pipeline.
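
One way to turn those three questions into numbers is to group logged events by search and compute the share of searches that led to each action. The sketch below assumes a simplified, hypothetical event format of `(search_id, action)` pairs; the action names and the event log are invented for the example.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple


def search_funnel(events: Iterable[Tuple[str, str]]) -> Dict[str, float]:
    """Compute per-search click, cart, purchase, and bounce rates.

    Each event is (search_id, action), where action is one of
    "search", "click", "add_to_cart", or "purchase" (hypothetical names).
    """
    actions_by_search: Dict[str, Set[str]] = defaultdict(set)
    for search_id, action in events:
        actions_by_search[search_id].add(action)

    total = len(actions_by_search)
    if total == 0:
        return {"click_rate": 0.0, "cart_rate": 0.0, "purchase_rate": 0.0, "bounce_rate": 0.0}

    def share(action: str) -> float:
        # Fraction of searches in which this action occurred at least once.
        return sum(1 for acts in actions_by_search.values() if action in acts) / total

    # A bounce is a search with no follow-up action at all.
    bounces = sum(1 for acts in actions_by_search.values() if acts <= {"search"})

    return {
        "click_rate": share("click"),
        "cart_rate": share("add_to_cart"),
        "purchase_rate": share("purchase"),
        "bounce_rate": bounces / total,
    }


events = [
    ("s1", "search"), ("s1", "click"), ("s1", "purchase"),
    ("s2", "search"),
    ("s3", "search"), ("s3", "click"), ("s3", "add_to_cart"),
    ("s4", "search"),
]
rates = search_funnel(events)
print(rates)
```

Computing the same rates separately for text and image searches, or per ranking variant, would show which signals deserve more or less weight.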

What We Learned

- Relevance is multi-dimensional: Users care not just about textual match, but about visuals, pricing, vendor trust, and even delivery time.

- Image search drives exploration: Shoppers often discover new vendors or categories when searching by image.

- Latency matters: Fast feedback reinforces user trust. Optimizing vector search latency was key to adoption.

What’s Next?

Our roadmap focuses on making discovery even more intuitive:

- Multi-modal search: Combining text + image in one query for richer intent expression.

- Personalized search results: Tailoring rankings based on past interactions and preferences.

- Search-driven trend analytics: Helping vendors stock up on what’s trending based on real-time search logs.

Closing Thoughts

At Buyam, we believe that search isn’t just a backend feature—it’s part of the user’s experience, from curiosity to checkout. By bringing together deep learning, search infrastructure, and human behavior analysis, we’re reimagining what it means to "look for something online."

This is just the beginning.
